De Kraker: "If OpenAI is right on the verge of AGI, why do prominent people keep leaving?"

Okay, yes.

no, the opposite. If you found the brain efficiently doing something computationally intractable, then you might either discover that P really equals NP, or that Turing-machine computation doesn't describe how brains work, or who knows what?

the thing is, though, that this is falsifiable science, not opinion. The result, either way, could be amenable to review and reproduction.

Not really. Even then scientists were predicting warming. Though there was no wide consensus.

opinion polling scientists on AGW in 1970 might not have had the result you were looking for

This is a red herring. Any basic AI can easily imitate the functions of c elegans almost exactly - except the part of it that evolves. But that's more a function of natural selection than c elegans itself.

The nematode C. elegans has 302 neurons and lives in the wild, feeding on certain bacteria, and displays waking and sleeplike states. I wonder how an LLM model with 302 parameters would function.

Yes, because correlation is not causation. They didn't know why those elements reacted with each other, just that they reliably did, and when they observed that other elements had similar properties they predicted that they would react in a similar way, and they did.

So your evidence that you don't need to understand something to predict it is a list of historical examples of people gaining an understanding of something through methodical observation and then using that understanding to predict the behavior? Are you serious with this shit??

There was consensus among scientists who were studying the topic. The issue was that most were not and still weighed in on the subject with an 'I don't know', which instead of meaning 'that's not my field' we retcon to mean 'I've studied the evidence and it's inconclusive'.

Not really. Even then scientists were predicting warming. Though there was no wide consensus.

A brain can grow. And it's unclear if the growth of the brain is related to learning/activity. It is unlikely that computation will be able to replicate the conditions in a meaningful enough way to ever do the experiment.

Okay, yes.

If you want to prove a brain is not a computer, you come up with something a brain can do, but a computer can't.

this is news to me, got a paper on that?

This is a red herring. Any basic AI can easily imitate the functions of c elegans almost exactly - except the part of it that evolves. But that's more a function of natural selection than c elegans itself.

In the last 50 years we stopped ignoring at least some of the environmental impacts of the car. There are plenty we continue to ignore. Additionally, the recurring costs of building around cars are increasingly being felt.

What do you think has changed in the last 50 years that would make electric cars unsustainable?

I do not like cars, but most folks seem to think they are great.

Sure, this is now almost a year and a half old -

this is news to me, got a paper on that?

Haha, sure.

condescend to me again.

That's a lot of projection to unpack on your part, there, hoss.

Haha, sure.

[playground taunting redacted]

Replicate what-- growing? learning? I don't think there's any reason to try to perfectly replicate a human brain.

A brain can grow. And it's unclear if the growth of the brain is related to learning/activity. It is unlikely that computation will be able to replicate the conditions in a meaningful enough way to ever do the experiment.

on the other hand LLMs "poop out words in an order that" has more sense than what comes out of influencers, celebrities and conspiracy theorists. i know, i know, not a high bar to clear but they have at least that going for them.

LLMs are nowhere near AGI. They are nowhere near even approaching bacteria in terms of being able to interact with their environment and adapt beyond their training.

They poop out words in an order that is not always nonsensical.

Literally the CA DMV requires all licensed AV operators to release accident/incident statistics and descriptions as well as "miles per disengagement" statistics. Tesla is not a licensed AV operator. Waymo, however, is, and their statistics are much better than humans. I don't think they have had an accident with human injury yet after millions of miles of testing. They also averaged like low thousands of miles per disengagement (like 1500-2500 iirc).

I understand this; these AI systems don't deal well with things they didn't see in training. But how many humans have had a serious accident while they were driving a car? What we really need to compare is a figure like miles per serious accident, averaged over all American (for example) drivers vs. some autonomous system. I just haven't seen that comparison.

If I'm wrong, and the comparison is out there, please point me to it.

Another interesting comparison would be drivers with a given blood alcohol level vs. these autonomous systems. There are lock-out systems that won't allow a human to drive if they breathe into a device and the device detects alcohol above a certain level; this is sometimes used for drivers who have had DUIs. Perhaps instead of preventing them from driving the car could take over.

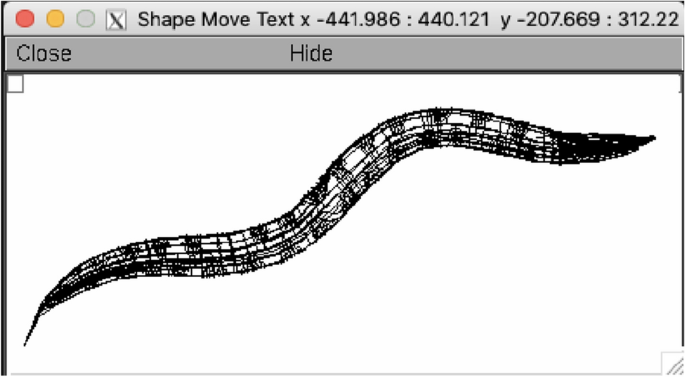

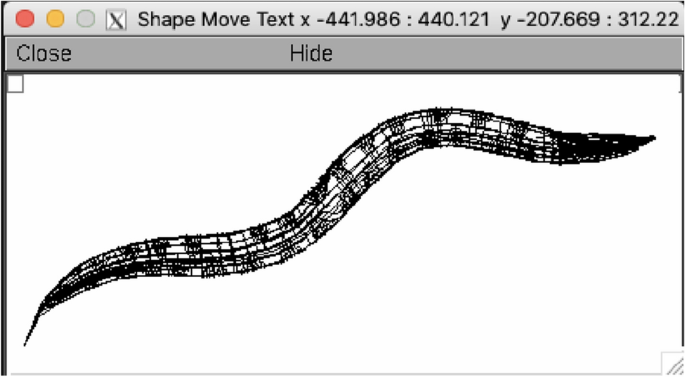

Learning the dynamics of realistic models of C. elegans nervous system with recurrent neural networks - Scientific Reports

Given the inherent complexity of the human nervous system, insight into the dynamics of brain activity can be gained from studying smaller and simpler organisms. While some of the potential target organisms are simple enough that their behavioural and structural biology might be well-known and...

www.nature.com

"These results nonetheless show that it is feasible to develop recurrent neural network models able to infer input-output behaviours of realistic models of biological systems, enabling researchers to advance their understanding of these systems even in the absence of detailed level of connectivity."

In this work we propose a methodology for generating a reduced order model of the neuronal behaviour of organisms using only peripheral information

I'm not saying that it is there yet - just that it has shown that it is technically feasible. There is an open-source project that I contributed to in the past (which models c. elegans) but one reason I stopped contributing is that it wants to model every part of the worm (down to the molecular level) and not just the brain itself - I wasn't as interested in that even though it is a noble cause:

this wasn't a full model, and didn't even attempt to fully model the nervous system of c. elegans. The paper stated in the beginning that full simulation is too slow/resource intensive. The paper took a traditional, non-ML, biological simulation and used it to generate training data for an artificial neural network. It aimed, only, to reproduce neural output from inputs, and made no attempt to use ML to model the internal state of the neurons themselves.

This work is a very long way from fully replicating the behavior of actual c. elegans.

Where do you get 10+ years from? I see absolutely no indication whatsoever that anyone has any idea how to get from what exists today, which is a fancy auto-complete, to actual reasoning. The failure of ChatGPT to deal with even the simplest logic puzzles is not some bug or minor shortcoming to be fixed, it's indicative of us not even moving in the direction of AGI. All we have is a word salad generator which often generates something that isn't obvious nonsense.

Because Sama and some others have lied to investors that they could achieve human-like intelligence within 5 years by mostly scaling up feed-forward neural-networks and the data used to train them. At the very least he promised them that hallucinations and basic reliable reasoning would be "done" pretty soon.

In reality these things will take 10+ years and will require new architectures like IJEPA or others. Probably many more fundamental advances are required.

Any investors that can wait for 10+ years will be OK, as long as the AI company they invested in survives and moves on to the next thing successfully. Many will take a soaking when the LLM/VLM bubble pops (gonna happen within 5 years, maybe a lot sooner).

EDIT: And some people don't want to be there when it starts raining.

but that is what you said:

I'm not saying that it is there yet

This is a red herring. Any basic AI can easily imitate the functions of c elegans almost exactly

Forced is a bit much. It is our tendency because the country is large and was built up after people had cars. But individuals also choose long commutes, road trips, distant visits. And they choose large, shiny, and fast vehicles.

Most people think they're great because they have a job they need to get to, and we've culturally accepted that spending $47,000 (median) is a reasonable thing to do for that job. Marketing helps us rationalize that decision by making the car an extension of your personality. So long as American society prevents people from seeing a viable alternative, what choice do they have but to love their car. It's so flexible that even if they refused to use it to get to a job, they can then live in it when they lose their home.

If you want to prove a brain is not a computer, you come up with something a brain can do, but a computer can't.

If you want to prove that brain is a computer, you get a computer to do that thing.

So your evidence that you don't need to understand something to predict it is a list of historical examples of people gaining an understanding of something through methodical observation and then using that understanding to predict the behavior? Are you serious with this shit??

I think you could produce safety comparable to human drivers by strict adherence to the road rules including driving prepared to give way to anything with, or which could acquire, right of way over you. It would still make mistakes, but while the situations would be different the overall severity would probably be better. Unfortunately the driverless car industry appears to be trying to implement the monkey’s paw version of that by lobbying to get the road rules changed so that everyone else has to stay out of their way.

It's the same problem facing autonomous vehicles -- the vehicles have to safely interact with irrational and unpredictable humans. The only way they can do that is if they are able to understand and predict human behavior, which, by necessity, means that they are just as complex, just as aware and cognizant as humans

Yes, I agree. I was being a bit flippant, which maybe I shouldn't here.

The second one, no.

So if you were, hypothetically, able to prove a negative, using some kind of unspecified evidence, would that prove anything?

Also, I don't see why this would be.

If I were able to prove to you the brain isn't doing some computationally impossible thing, would that prove to you the brain is a computer?

It sure would have.

opinion polling scientists on AGW in 1970 might not have had the result you were looking for

This is a statement of faith. Like, well, nobody flipped as high as Simone Biles and everyone said it couldn't be done, so if she can flip 12 feet, there's really no reason the next GOATed gymnast can't fucking levitate with the power of her mind and soar a hundred feet in the air.

Yes, I agree. I was being a bit flippant, which maybe I shouldn't here.

What I meant is: that's how the conversation has gone historically and how it will continue to go. Some not-great philosopher comes up with an impossible task. Then some engineer builds a computer that can do the impossible task.

There's no actual proving that occurs. The philosopher is wrong. And people will blithely agree or disagree until the machine is invented.

The same will be the case with "AGI". Whatever the hell that is.

Perfect answer. It's not and will never be able to achieve AGI, even with an order of magnitude more of everything. It's just not built for that, and the journalist keeps on forgetting that. It's very poor journalism when you don't understand what's going on and can't properly identify the fallacies in PR.

Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

In the past 50 years. Get in line, neural networks are still using the same backward propagation as in the 70s. they have not found anything.

… in all of a year …

A bit impatient, no? This shit talking is a little dumb. The answer to why veterans are leaving OpenAI probably has more to do with Sam Altman being a reckless dick and the fact that these same people can work on AGI at other companies in a more ethical way. Or have more money, power, and/or interesting work at a younger venture. OpenAI is far from the only game in town.

Many of the veterans are far more concerned about “sci-fi fantasies” than most people here, and are more than a little unsettled to be working on what they perceive to be more dangerous than the thermonuclear bomb, as fascinating as it might be. They might also be burnt out and wishing to live more of their ordinary life while they still have the chance. Of course most people here think they’re wacko for thinking these things.

So if you were, hypothetically, able to prove a negative, using some kind of unspecified evidence, would that prove anything?

That is quite literally not how this works.

It sure would have.

This is a statement of faith. Like, well, nobody flipped as high as Simone Biles and everyone said it couldn't be done, so if she can flip 12 feet, there's really no reason the next GOATed gymnast can't fucking levitate with the power of her mind and soar a hundred feet in the air.

why is that a reasonable conclusion? Nature results in lots of things we are unable to replicate.

And if our organic matter can generate an AGI, then it seems fair to conclude that we'll eventually figure out how to do this with other substrates, too.

and, in a notable parallel, many scientists at the time were, in one way or another, dependent for their livelihood on some aspect of the energy or fossil fuel sector. Or, said more succinctly:

We did have solid reasons to be worried about CO2, but in 1970 there were waaaaay too many loose ends still remaining. Cloud feedbacks, paleoclimate, water vapor feedback. The scientific consensus really firmed up during the 1970s and 1980s, which is why the IPCC was founded in 1988.

But don't take my word for it: you can go back and read the literature from that era, and see for yourself the caution that scientists within that field had. The modern confidence in anthropogenic climate change is hard-won, after decades of research, skeptical argument and a slow, slow accumulation of evidence.

It is difficult to get a man to understand something when his salary depends on his not understanding it.

why is that a reasonable conclusion? Nature results in lots of things we are unable to replicate.

There are phenomena in nature that are non-linear or chaotic that are simply not amenable to simulation. We don't have any well-grounded scientific theories that would tell us whether cognition is one of them, or not.

I suspect we're a lot closer to growing a 'working' brain in a vat than we are to replicating one in-silico, but such an experiment would be deeply amoral and very troubling.

With a sufficiently powerful computer we would in principle be able to run a statistical QM + CFD model of all the ion pumps, chemical flows, and so on within the brain, but I don’t know if statistical approaches would be meaningful at the neuron level or if we need a complete QM simulation (requiring an insanely powerful computer). I suspect the answer is that it would cost an insane amount even to model a nematode accurately, let alone something that has demonstrated reasoning beyond the training set without explicit guidance, such as a crow. The two questions then are how do we initialise the simulation and how much can we simplify it and still produce a working brain.

why is that a reasonable conclusion? Nature results in lots of things we are unable to replicate.

There are phenomena in nature that are non-linear or chaotic that are simply not amenable to simulation. We don't have any well-grounded scientific theories that would tell us whether cognition is one of them, or not.

I suspect we're a lot closer to growing a 'working' brain in a vat than we are to replicating one in-silico, but such an experiment would be deeply amoral and very troubling.

that's the rub, isn't it. The question at hand is, is 'eventually' within a timeframe where OpenAI, et al, are viable commercial concerns? Or NFT-like ponzi schemes.

eventually

I don't know what you mean by this. Could you give an example? I understand enough why many things like weather patterns can't be predicted or perfectly replicated. But, I don't see why they couldn't be simulated or physically built though.

why is that a reasonable conclusion? Nature results in lots of things we are unable to replicate.

There are phenomena in nature that are non-linear or chaotic that are simply not amenable to simulation. We don't have any well-grounded scientific theories that would tell us whether cognition is one of them, or not.
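One way to make the predict-versus-simulate distinction in this exchange concrete is a toy chaotic system. The sketch below uses the logistic map, which is my choice of illustration and not something anyone in the thread cited; the function name and starting values are likewise made up for the example. The rule is trivial to run step by step, yet two runs whose starting points differ by 1e-10 stop agreeing after a few dozen steps. So a chaotic system can be simulated just fine; what you lose is long-range prediction of one particular real trajectory from imperfect measurements. It says nothing about whether cognition falls into this category.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

# Same deterministic rule, starting points differ by one part in 10^10.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Per-step distance between the two runs.
gaps = [abs(p - q) for p, q in zip(a, b)]
first_big = next(i for i, g in enumerate(gaps) if g > 0.1)

print(f"gap after 1 step: {gaps[1]:.2e}")
print(f"gap first exceeds 0.1 at step {first_big}")
```

Running the same call twice gives identical output, which is the "simulated" half of the argument; the growing gap between `a` and `b` is the "can't be predicted" half.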

Sort of like charging electric cars. That first 80% gives you a lot of utility.

I think it's because the young'uns don't yet realize the "80% of the way there!" is the easy part, and it's the last 20% that takes all the time (or proves to be impossible).

"Geeze, five years later and now we're only 81% of the way there..."

i would like to see a reference to this research if you have it.

However let me end with a fun fact. The human brain has like 100 trillion synapses which in our current understanding are most analogous to parameters in a model. However, recent research has suggested that the microtubules in neurons could also do computations inside each neuron. Each neuron has billions of microtubules. That could mean we need to model more like 100 million trillion or more parameters.
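For anyone wanting to check the arithmetic in the quoted "fun fact", here is a rough back-of-envelope sketch that takes the post's figures at face value. Two inputs are my assumptions and not from the post: a neuron count of about 86 billion (a commonly cited estimate) and reading "billions" of microtubules as a lower bound of one billion per neuron. The function name is made up for illustration. This only checks that the numbers multiply out as claimed; it does not address whether microtubules compute anything.

```python
SYNAPSES = 100 * 10**12          # "100 trillion synapses", from the post
NEURONS = 86 * 10**9             # ASSUMPTION: common estimate, not in the post
MICROTUBULES_PER_NEURON = 10**9  # ASSUMPTION: "billions" read as at least 1e9

def microtubule_parameter_count(neurons=NEURONS, per_neuron=MICROTUBULES_PER_NEURON):
    """Total count if every microtubule were treated as one model parameter."""
    return neurons * per_neuron

total = microtubule_parameter_count()
print(f"{total:.1e} microtubules, {total // SYNAPSES:,}x the synapse count")
```

Under those assumptions the product is on the order of 10^20, the same order of magnitude as the post's "100 million trillion", and several hundred thousand times the synapse count.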