De Kraker: "If OpenAI is right on the verge of AGI, why do prominent people keep leaving?"

See full article...


Like with a practical fusion reactor. The latest result after 92+ years of research is a 5-second-long reaction.

I think it's because the young'uns don't yet realize the "80% of the way there!" is the easy part, and it's the last 20% that takes all the time (or proves to be impossible).

"Geeze, five years later and now we're only 81% of the way there..."

Otoh, we do have AVs now. If they keep rolling out, it'll still be one of the most revolutionary techs of our generation, just 10 years too slow.

You see a similar sort of asymptotic relationship with fully autonomous road vehicles. It looked like it might be a solved problem back in 2018, and in a lot of ways the best systems seem to be 98% there. But that last 2% makes all the difference in the real world.

The main similarity is that investors in the modern tech era, between the iPhone, Amazon e-commerce, Google search, and a number of others, have gotten addicted to the idea that if they invest a bit of money in just the right spot, it can blow up and pretty quickly make them fantastically wealthy. These are ideas with such broad appeal and such incredible utility that they consume entire industries' worth of value. Self-driving cars are one of those things: if you can crack that nut, you win a MASSIVE market, even if all you accomplish is making the occupation of truck driver nonexistent. Same goes for AGI: it consumes so much that its inventors will hoover up a not-small share of global GDP. And they are things where, if you do nail it (like Google Search), there's not really a place for competitors to operate. You just win capitalism.

There seem to be many similarities between Musk's "Full Self-Driving" and OpenAI's "AGI". I'm not expecting either any time soon.

I think we're going to find that the problem right now isn't that gas cars are bad or that human-driven cars are inefficient, but that cars carry so many secondary costs that they are unsustainable as the thing to organize society around - that applies to EVs and autonomous cars and everything else that substitutes into that role. So you may get to autonomy just in time to see this $40,000+ forced consumption tax on society collapse under its own weight.

Otoh, we do have AVs now. If they keep rolling out, it'll still be one of the most revolutionary techs of our generation, just 10 years too slow.

No, it doesn't. You are comparing parameters with connections; they are different.

ChatGPT-3.5 = 175 billion parameters, according to public information

Different studies give slightly different numbers for the human brain, but it's roughly 1000x more: from 0.1 to 0.15 quadrillion synaptic connections. Source: https://www.scientificamerican.com/article/100-trillion-connections/ (among others)

While it's likely to require something more than just scaling up the model size, I thought this gives some clue about scale. I agree with you: perhaps the answer is "it's not" scaling.
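A quick worked check of the quoted figures, treating one parameter and one synapse as comparable units purely for the sake of the arithmetic (which, as the reply points out, they are not):

$$
\frac{1.0\times10^{14}\ \text{synapses}}{1.75\times10^{11}\ \text{parameters}} \approx 571,
\qquad
\frac{1.5\times10^{14}\ \text{synapses}}{1.75\times10^{11}\ \text{parameters}} \approx 857
$$

So on these numbers the gap is a factor of roughly 570 to 860, just under three orders of magnitude.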

My current favorite benchmark is trying to get them to play Wordle. LLMs seem completely incapable of doing that, even with the most patient and cooperative human assistant. Even a 6-year-old can play Wordle better than most LLMs. Worse, LLMs, unlike 5-year-olds, can't understand that they aren't accomplishing the task. Until they can perform all cognitive tasks as well as a 6-year-old, and many as well as an adult expert, I'll be unconvinced we are approaching AGI.
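To make the benchmark concrete, here is a minimal sketch of the feedback rule a Wordle player has to reason with: green for the right letter in the right spot, yellow for a letter that appears elsewhere in the answer, gray otherwise. Repeated letters are scored the way the game is commonly understood to work (greens first, then yellows only while unmatched copies remain). The function name and the `G`/`Y`/`.` encoding are illustrative choices, not anything from the comment.

```python
from collections import Counter


def wordle_feedback(guess: str, answer: str) -> str:
    """Return a pattern string: 'G' = right letter, right spot;
    'Y' = letter is in the answer but elsewhere; '.' = not (or no more) in the answer."""
    guess, answer = guess.lower(), answer.lower()
    if len(guess) != len(answer):
        raise ValueError("guess and answer must be the same length")

    result = ["."] * len(guess)
    remaining = Counter()  # answer letters not consumed by an exact match

    # First pass: exact matches (green); everything else stays available for yellows.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            remaining[a] += 1

    # Second pass: misplaced letters (yellow), consuming counts left to right
    # so a repeated letter in the guess is not over-reported.
    for i, g in enumerate(guess):
        if result[i] != "G" and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1

    return "".join(result)
```

For example, guessing "speed" against "abide" marks only the first `e` as yellow, because the answer contains a single `e`.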

Great comment. I think Meta's philosophy of open-weighting their models, which perform more or less at GPT-4's level, puts OpenAI and other general AI companies in a pretty tough spot. Where do you go from here if you're them? Spend billions on a GPT-5 that is better at generalist "knowledge" and asymptotically approaches outputting only the "smartest" stuff in its training set? Pretty cool to play around with, but still not necessarily a product. I would say that Apple Intelligence is the most compelling use case of AI yet, and it's not really a cutting-edge model by many standards. It doesn't need to be. I'm not really sure what breakthrough in AI would get me excited again, but I'm very curious to play around with Llama 3.1.

I've argued this elsewhere, so I'll offer it up here as well.

OpenAI's near-term problem is that they are clearly chasing AGI and believe that ChatGPT serves as an MVP on that path (I don't think it is, but they do). The problem is that they need to be a functional business to pursue that, unless it's right around the corner (which they keep insinuating it is).

ChatGPT isn't a very good functional business, because it over- or underserves most of the markets where people are willing to pay for this. At one end you have Apple's pitch: on-device AI that can interact with your personal data, which is more utilitarian even if it can't do as much. Helping me find an old email is probably more useful to more people than an AI that can write both a sonnet and a real-estate listing but won't fit locally on a phone. At the other end you have expert systems to help synthesize drugs, diagnose cancer, or analyze their quarterly sales. Those are also not well served by writing a sonnet or a real-estate listing, and you want them tightly integrated into your company's data, which is why the open-source tools are getting so much attention.

What's left in the middle doesn't seem like a particularly large business, and certainly not large enough to sustain their ambitions toward building an AGI. Put aside whether you think ChatGPT is good enough or not, or whether it will or won't lead to AGI: ChatGPT is not a good enough fit for what the market wants to buy to sustain the effort. You can only get so far on VC dollars and Microsoft panic investing. You have to align the product to what consumers or enterprises want. But the principals want AGI and are refusing to do that, hence the conflict. Meanwhile, Apple can build their own local stuff and offer it up free to users, and open source is out there hitting the wider set of needs, both undermining the opportunity to charge the kind of money they need.

The nematode C. elegans has 302 neurons and lives in the wild, feeding on certain bacteria, and displays waking and sleeplike states. I wonder how an LLM model with 302 parameters would function.

There are 100 trillion synapses in the human brain. GPT-4 has around 1.76 trillion parameters. So while I don't think we can do an apples-to-apples comparison between parameters and synapses, you did that comparison. So how does your math work there?
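Working the same ratio with the figures in this comment (the 1.76 trillion count for GPT-4 is an outside estimate; OpenAI has not published GPT-4's parameter count), and again treating parameters and synapses as comparable only for the arithmetic:

$$
\frac{1.0\times10^{14}\ \text{synapses}}{1.76\times10^{12}\ \text{parameters}} \approx 57
$$

That is less than two orders of magnitude, versus a factor of roughly 570 for the 175-billion-parameter figure.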

I mean, I'm sorta kinda closer to technically knowledgeable (still not an AI researcher), and nothing that anyone has shown so far will make that leap. The current models are still hilariously under-capable compared to what AGI should be.

Having followed AI off and on since the Prolog/Society of Mind days, I've never understood how a scaled-up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

The problem is that AI safety is already a present concern. These models may generate bullshit, but sling enough bullshit around and some people will start to believe it. Unfortunately, most people involved in the business seem to be in either the "AI is our savior" camp or the "AGI is coming soon with doom close behind" camp. None seem interested in the real harms being created today.

If OpenAI is nowhere near AGI, then it seems all the "safety" concerns are a bit unfounded. In 5 years we are going to learn that LLMs are probably not the way to achieve AGI. Purely anecdotal, but from daily use of Claude and ChatGPT, I don't find Claude to be any more safe and secure in its output than ChatGPT.

I'd go with the notion of Altman being a used car salesman, investors being used car buyers, and the folks at OpenAI all knowing it.

They're leaving because (and this is a vague recollection of some articles I read online, so I might be incorrect here) Altman seems to have unrealistic goals. It could also be that those heading out don't want to be tied to any copyright suits. Or, as the 2nd commenter said, money.

Or, they only went there to learn enough about how OpenAI is doing their magic and want to go chase those sweet VC dollars with their own startup.

Maybe we’re less easily impressed than you.

If we extrapolate from the progress in deep learning over the last 12 years, low. Very low.

In that timespan we went from, “Holy shit, it can recognize cats slightly better than bad” to “Holy shit, ChatGPT.” How do people not understand this?

Except that, at least in the case of autonomous driving, you can kinda "gate" the end state in this limited form. An autonomous car doesn't necessarily need to be able to handle every conceivable driving scenario, for instance snow. Just don't operate the cars when…

I think AGI-like technology will be stuck in the "uncanny valley" for quite a while. This is where it will be close to human-like intelligence but never quite close enough.

Which is not to say it won't be useful, just not human-like.

You see a similar sort of asymptotic relationship with fully autonomous road vehicles. It looked like it might be a solved problem back in 2018, and in a lot of ways the best systems seem to be 98% there. But that last 2% makes all the difference in the real world.

You don't need to fully predict human behavior for fully automated driving. You just have it react to/predict how humans drive cars, walk on sidewalks, and so on, while being predictable itself. Subway trains don't predict human behavior, and 99% of the time we interact fine with them, even when they are the surface-level type. As long as the cars are predictable and can handle the limited subset of "hey, that car started moving towards my lane, maybe they're going to merge" and "hey, that pedestrian just stepped into a crosswalk, I should stop", then things are generally fine.

Any system that can accurately and safely interact with humans has to be able to accurately predict human actions and behaviors and must be, by necessity, at least as complex and cognizant as humans. It's the same problem facing autonomous vehicles -- the vehicles have to safely interact with irrational and unpredictable humans. The only way they can do that is if they are able to understand and predict human behavior, which, by necessity, means that they are just as complex, just as aware and cognizant as humans. An ant will never be able to understand humans or predict human action and behavior. As such, an 'ant' operating heavy machinery, driving vehicles, or running a kitchen will never be safe around people. It will always be a hazard to anyone around it. The ant has to be smart enough to think like a human to be safe around humans. And if the ant can think like a human....

It depends what “there” is. Some things like speech recognition and machine translation languished at the 80% mark for a couple of decades, but are now “there” for most definitions of there, thanks to modern deep neural networks.

I think it's because the young'uns don't yet realize the "80% of the way there!" is the easy part, and it's the last 20% that takes all the time (or proves to be impossible).

"Geeze, five years later and now we're only 81% of the way there..."

I'll start believing claims that AGI is "getting close" when someone has a system that doesn't completely eat itself from the inside when fed its own output.

Because Sama and some others have lied to investors that they could achieve human-like intelligence within 5 years by mostly scaling up feed-forward neural networks and the data used to train them. At the very least he promised them that hallucinations and basic reliable reasoning would be "done" pretty soon.

In reality these things will take 10+ years and will require new architectures like I-JEPA or others. Probably many more fundamental advances are required.

Any investors that can wait for 10+ years will be OK, as long as the AI company they invested in survives and moves on to the next thing successfully. Many will take a soaking when the LLM/VLM bubble pops (gonna happen within 5 years, maybe a lot sooner).

EDIT: And some people don't want to be there when it starts raining.

I can imagine an attempt at AGI by using an LLM as the "processing" block. At least two more blocks would be needed though: a "state" block and an executive/goal/motivation/emotion block. Connect them all together and then get them to advance the state in the direction dictated by the goals. I'm sure the rest is just details.

Same. I don't even understand what the underpinnings of AGI are supposed to look like.

I think this is a more valid point than the other person saying "AVs must necessarily be AGI". I'm from car country, and even I wish we could all pile into microcosms of 20-story mixed-use buildings arranged directly next to each other.

I think we're going to find that the problem right now isn't that gas cars are bad or that human-driven cars are inefficient, but that cars carry so many secondary costs that they are unsustainable as the thing to organize society around - that applies to EVs and autonomous cars and everything else that substitutes into that role. So you may get to autonomy just in time to see this $40,000+ forced consumption tax on society collapse under its own weight.

Sure, it’s called being ignorant of the long road to getting here.

Maybe we’re less easily impressed than you.

Halvar Flake, the security researcher and entrepreneur, said that the combustion engine didn't get us to the moon, and similarly LLMs will not get us to AGI.

Having followed AI off and on since the Prolog/Society of Mind days, I've never understood how a scaled-up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

Yeah, it's not an issue of throwing more computational power at the problem.

Having followed AI off and on since the Prolog/Society of Mind days, I've never understood how a scaled-up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

What do you think has changed in the last 50 years that would make electric cars unsustainable?

I think we're going to find that the problem right now isn't that gas cars are bad or that human-driven cars are inefficient, but that cars carry so many secondary costs that they are unsustainable as the thing to organize society around - that applies to EVs and autonomous cars and everything else that substitutes into that role. So you may get to autonomy just in time to see this $40,000+ forced consumption tax on society collapse under its own weight.

You cannot predict the behavior of anything you don't understand. To understand the behavior of an organism, you need to be at least as cognizant as that organism. It's part of why people who are not smart have such a difficult time connecting with a world that is built by and for people who are much smarter than them -- they do not have the capacity to fully grasp the concepts governing the systems around them (regardless of whether those systems are good or bad). It's also why people who are not smart gravitate towards simple answers to the complexity of the real world -- simple answers that they can understand 'make more sense' to them, even if those answers are completely wrong.

You don't need to fully predict human behavior for fully automated driving. You just have it react to/predict how humans drive cars, walk on sidewalks, and so on, while being predictable itself. Subway trains don't predict human behavior, and 99% of the time we interact fine with them, even when they are the surface-level type. As long as the cars are predictable and can handle the limited subset of "hey, that car started moving towards my lane, maybe they're going to merge" and "hey, that pedestrian just stepped into a crosswalk, I should stop", then things are generally fine.

You don't need an autonomous car to discuss the works of Plato with you. Therefore it doesn't have to be as generally intelligent as you.

You don't need to understand someone's views on moral relativism or Jungian psychology to predict whether they will step out onto the street after continuously walking in a straight line toward it without slowing down.

You cannot predict the behavior of anything you don't understand. To understand the behavior of an organism, you need to be at least as cognizant as that organism. It's part of why people who are not smart have such a difficult time connecting with a world that is built by and for people who are much smarter than them -- they do not have the capacity to fully grasp the concepts governing the systems around them (regardless of whether those systems are good or bad). It's also why people who are not smart gravitate towards simple answers to the complexity of the real world -- simple answers that they can understand 'make more sense' to them, even if those answers are completely wrong.

The ant cannot and will never be able to predict the behavior and actions of humans. It will never be a 'safe' driver. It will never be a safe operator of heavy machinery. It will never be safe to run a kitchen around other people. It will always be a hazard to anyone around it. Because it cannot predict what the people around it will do next, no matter how well it 'understands' the rules that are supposed to be followed. Because people don't follow 'the rules' all the time. Not even most of the time. They're more like guidelines.

Um, we have celestial calendars that very accurately predicted eclipses and the like, made by people who couldn't remotely understand why those things were happening.

You cannot predict the behavior of anything you don't understand.

Another example, go try to catch a rabbit with your hands. Just do it.

You cannot predict the behavior of anything you don't understand. To understand the behavior of an organism, you need to be at least as cognizant as that organism. It's part of why people who are not smart have such a difficult time connecting with a world that is built by and for people who are much smarter than them -- they do not have the capacity to fully grasp the concepts governing the systems around them (regardless of whether those systems are good or bad). It's also why people who are not smart gravitate towards simple answers to the complexity of the real world -- simple answers that they can understand 'make more sense' to them, even if those answers are completely wrong.

The ant cannot and will never be able to predict the behavior and actions of humans. It will never be a 'safe' driver. It will never be a safe operator of heavy machinery. It will never be safe to run a kitchen around other people. It will always be a hazard to anyone around it. Because it cannot predict what the people around it will do next, no matter how well it 'understands' the rules that are supposed to be followed. Because people don't follow 'the rules' all the time, or even most of the time. They're more like guidelines.

Problem with self-driving cars: many human beings are not too smart. They are very emotional and emotionally driven to do totally illogical things (drive too fast, risk their lives and those of others, be impatient, get angry at other drivers "because they suck at driving"; interesting fact: it is always THE OTHERS who are bad, never the person themself).

Another example, go try to catch a rabbit with your hands. Just do it.

The rabbit is not as smart as you (or are you going to seriously make the argument that a rabbit is as smart as a human?). And yet, it knows plenty well enough how to run away from you. How can it be that the rabbit predicted your movements?!? Perhaps it's because you don't have to fully understand something to avoid killing or being killed by it.

That or you are seriously arguing that a rabbit must understand literature and science in order to run away when you approach it.

The same applies to autonomous cars; they don't have to be as smart as us, they just have to be smart enough to not run us over.

Hold on here. Autonomous cars are not safe. They might be safer, but they are not safe. You're describing a physical space (physicist here) where each object in the space is measured and assigned a potential velocity vector that it is either demonstrating or could be demonstrating before the next sample, which produces a potential impact cone with that object. This is done for everything (pedestrians, cars, cyclists, dogs, kids, etc.) in the scene, and for the vehicle being driven, with an additional calculation for how quickly the vehicle could slow down/turn/etc. if needed. The vehicle would then proceed while ensuring that none of those potential impact cones intersect. And you're arguing that self-driving vehicles do this. They don't. They cheat. That's part of why this is hard to solve.

You are making nonsense arguments. Physical interactions, and especially physical interactions in the realm of streets and cars, are a small subset of human behavior and conscious breadth. And you hardly even need to "predict" anything: autonomous vehicles can measure your exact distance and velocity a hundred times a second and just react as needed. Their reaction times far outstrip the blob of goo between your ears.

In any case, this argument is already dead: we already have autonomous cars. They operate every day in SF. And at least in the case of Waymo, they cause relatively little harm. Sure there have been some cringe-worthy screwups, like the ambulance thing, but that was easily solved by updating the software and also collaborating with local emergency responders to design a system to allow them to redirect autonomous cars around ongoing incident areas.
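The impact-cone check described in the "Hold on here" comment can be sketched roughly as follows. This is a minimal, deliberately conservative illustration under stated assumptions (flat 2D world, circular footprints, a known worst-case speed for each object, and an ego vehicle that brakes in a straight line); `TrackedObject`, `is_plan_safe`, and `braking_path` are hypothetical names, and, as that comment stresses, real self-driving stacks do not work this simply.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedObject:
    x: float          # measured position (m)
    y: float
    max_speed: float  # worst-case speed it could reach before the next sample (m/s)
    radius: float     # footprint radius (m)


def is_plan_safe(ego_path, objects, ego_radius=1.0):
    """ego_path: list of (t, x, y) samples of the planned ego trajectory, t in seconds.

    Each object's 'impact cone' is a disk whose radius grows as max_speed * t,
    i.e. everywhere it could possibly be by time t. The plan is accepted only
    if the ego footprint stays outside every cone at every sample."""
    for t, ex, ey in ego_path:
        for obj in objects:
            reach = obj.radius + obj.max_speed * t + ego_radius
            if math.hypot(ex - obj.x, ey - obj.y) <= reach:
                return False
    return True


def braking_path(speed, decel, dt=0.1):
    """Positions along +x if the ego vehicle starts braking at `decel` m/s^2 now."""
    if decel <= 0:
        raise ValueError("decel must be positive")
    stop_time = speed / decel
    steps = int(stop_time / dt)
    times = [i * dt for i in range(steps + 1)] + [stop_time]
    # Constant deceleration: x(t) = v*t - a*t^2/2, valid until the car stops.
    return [(t, speed * t - 0.5 * decel * t * t, 0.0) for t in times]


# Usage: can we still stop clear of a pedestrian 30 m ahead and 3 m to the side?
pedestrian = TrackedObject(x=30.0, y=3.0, max_speed=2.0, radius=0.5)
print(is_plan_safe(braking_path(speed=10.0, decel=7.0), [pedestrian]))
```

Because each cone grows linearly with time, a longer stopping horizon makes every pedestrian or cyclist claim more space, which is one reason a literal version of this rule would make a vehicle very timid.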

You are making an assertion without any supporting facts. They DID understand the movements of celestial bodies. They understood it quite well.

Um, we have celestial calendars that very accurately predicted eclipses and the like, made by people who couldn't remotely understand why those things were happening.

There is a difference between being able to predict something, and being able to defend why the prediction is valid, which is what I think you're describing.

To use a current event, pollsters are pretty good at predicting the outcome of an election, but they are all over the goddamn map in their explanations for why voters are voting that way to the degree that it's almost impossible to work out which, if any of them, are correct. But the polling stands.

I've WATCHED rabbits flee predators. On many occasions. They're not predicting anything. They're just running in a direction where they don't see a threat. It's effective because they're also faster and more agile than most predators. But that is also precisely how they get caught by predators who can and do very much understand how rabbits flee. More to the point, Native American hunters who have grown up learning how to hunt small game absolutely can and do catch rabbits by understanding and predicting their flight behavior. Heck, I've come to understand a little bit about it and can reasonably predict how the rabbits who live around me will react and where they'll go, even if I'm not fast enough to put it to any sort of practical use (were I so inclined, which I'm not).

Another example, go try to catch a rabbit with your hands. Just do it.

The rabbit is not as smart as you (or are you going to seriously make the argument that a rabbit is as smart as a human?). And yet, it knows plenty well enough how to run away from you. How can it be that the rabbit predicted your movements?!? Perhaps it's because you don't have to fully understand something to avoid killing or being killed by it.

That or you are seriously arguing that a rabbit must understand literature and science in order to run away when you approach it.

The same applies to autonomous cars; they don't have to be as smart as us, they just have to be smart enough to not run us over.

No, they didn't. You're just saying the same thing I said with different words.

You are making an assertion without any supporting facts. They DID understand the movements of celestial bodies. They understood it quite well.

Also worth pointing out that the overwhelming majority of scaling “laws” aren’t.

Scaling laws don't matter if you are running out of training data, as some researchers suggest (like this recent paper on arXiv https://arxiv.org/html/2211.04325v2).
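One commonly cited way of writing a scaling law makes the training-data point explicit. In the parametric fit from DeepMind's Chinchilla paper (Hoffmann et al., 2022), loss is modeled as a function of parameter count $N$ and training tokens $D$, where $E$, $A$, $B$, $\alpha$, $\beta$ are empirically fitted constants; it is a curve fit over a tested range, not a law of nature:

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}},
\qquad
\lim_{N \to \infty} L(N, D) = E + \frac{B}{D^{\beta}}
$$

If $D$ is capped because usable training text runs out, adding parameters only shrinks the middle term, and under this form the loss cannot fall below $E + B/D^{\beta}$.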

The nematode C. elegans has 302 neurons and lives in the wild, feeding on certain bacteria, and displays waking and sleeplike states. I wonder how an LLM model with 302 parameters would function.

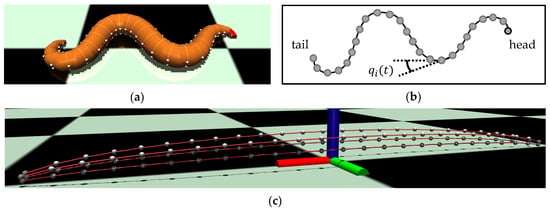

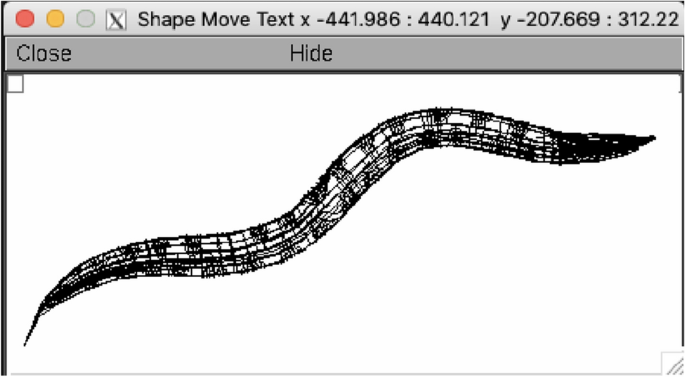

This study presents a novel model of the digital twin C. elegans, designed to investigate its neural mechanisms of behavioral modulation. The model seamlessly integrates a connectome-based C. elegans neural network model and a realistic virtual worm body with realistic physics dynamics, allowing for closed-loop behavioral simulation. This integration enables a more comprehensive study of the organism’s behavior and neural mechanisms. The digital twin C. elegans is trained using backpropagation through time on extensive offline data of chemotaxis behavior generated with a PID controller. The simulation results demonstrate the efficacy of this approach, as the model successfully achieves realistic closed-loop control of sinusoidal crawling and chemotaxis behavior.
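For readers unfamiliar with the "PID controller" the abstract uses to generate its training data, here is a textbook discrete PID loop driving a toy point agent up an attractant gradient. This is only an illustration of the control technique, not the authors' setup: the agent, the gains, and the assumption that the attractant peak sits at the origin (so the gradient points straight at it) are all hypothetical.

```python
import math


class PID:
    """Textbook discrete PID: u = kp*e + ki*(integral of e dt) + kd*(de/dt)."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(a: float) -> float:
    """Map an angle to [-pi, pi] so the controller turns the short way round."""
    return math.atan2(math.sin(a), math.cos(a))


def steer_up_gradient(x, y, heading, speed=1.0, dt=0.1, steps=200, gains=(2.0, 0.0, 0.1)):
    """Hypothetical toy agent moving at constant speed; its turn rate is the PID
    output on heading error. With a radially symmetric peak at (0, 0), the
    direction of steepest increase is simply the bearing to the origin."""
    pid = PID(*gains)
    trajectory = [(x, y)]
    for _ in range(steps):
        gradient_dir = math.atan2(-y, -x)           # direction of steepest increase
        error = wrap_angle(gradient_dir - heading)  # heading error in radians
        heading += pid.update(error, dt) * dt       # PID output used as turn rate
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        trajectory.append((x, y))
    return trajectory


# Start 5 units from the peak, facing sideways, and let the controller steer.
path = steer_up_gradient(5.0, 0.0, math.pi / 2)
print(min(math.hypot(px, py) for px, py in path))
```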

By conducting node ablation experiments on the digital twin C. elegans, this study identifies 119 pivotal neurons for sinusoidal crawling, including B-type, A-type, D-type, and PDB motor neurons and AVB and AVA interneurons, which have been experimentally proven to be involved. The results correctly predicted the involvement of DD04 and DD05, as well as the irrelevance of DD02 and DD03, in line with experimental findings. Additionally, head motor neurons (HMNs), sublateral motor neurons (SMNs), layer 1 interneurons (LN1s), layer 1 sensory neurons (SN1s), and layer 5 sensory neurons (SN5s) also exhibit significant impact on sinusoidal crawling. Furthermore, 40 nodes are found to be essential for chemotaxis navigation, including 10 sensory neurons, 15 interneurons, and 11 motor neurons, which may be associated with ASE sensory processing and turning behavior. Our findings shed light on the role of neurons in the behavioral modulation of sinusoidal crawling and chemotaxis navigation, which are consistent with experimental results.
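The node-ablation idea in the abstract (silence one neuron, see whether the behavior changes) can be illustrated on a toy network. The sketch below is a hypothetical four-node rate network, not the paper's connectome-based model: each node is clamped to zero in turn, and nodes whose removal shifts the output node's activity by more than a threshold are flagged as "pivotal".

```python
import math


def run_network(weights, inputs, ablated=frozenset(), steps=5):
    """Tiny recurrent rate network: state_i <- tanh(sum_j w_ij * state_j + input_i).
    Ablated nodes are clamped to zero activity at every step."""
    n = len(weights)
    state = [0.0] * n
    for _ in range(steps):
        new_state = []
        for i in range(n):
            if i in ablated:
                new_state.append(0.0)
                continue
            drive = inputs[i] + sum(weights[i][j] * state[j] for j in range(n))
            new_state.append(math.tanh(drive))
        state = new_state
    return state


def ablation_scan(weights, inputs, output_node, threshold=0.1):
    """Ablate each non-output node in turn; return the nodes whose removal changes
    the output node's final activity by more than `threshold`."""
    baseline = run_network(weights, inputs)[output_node]
    pivotal = []
    for k in range(len(weights)):
        if k == output_node:
            continue
        out = run_network(weights, inputs, ablated={k})[output_node]
        if abs(out - baseline) > threshold:
            pivotal.append(k)
    return pivotal


# Hypothetical chain: sensor 0 -> interneuron 1 -> motor neuron 2; node 3 is driven
# by its own input but projects nowhere, so ablating it should not matter.
W = [
    [0.0, 0.0, 0.0, 0.0],
    [2.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(ablation_scan(W, inputs=[1.0, 0.0, 0.0, 0.5], output_node=2))
```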

With his announcement that Tesla is an AI company, his entire fortune is now based on pumping this meme quite literally for all he is worth.

I find irony in all these announcements that “OpenAI will definitely have magic AI results any minute now, y’all” being posted on Elon Musk’s website.

I'd certainly agree that the + seems to be the missing element. What we have at the moment is a shockingly good natural language interface. The method of creating that interface has encoded significant amounts of human knowledge, including best practice, within it. Large parts of that knowledge are retrievable, with perhaps improving levels of accuracy. Agency, understanding, and application of knowledge seem to be capabilities that require more than an LLM, however complex.

Basically it is induction based on emergent behaviour and test performance seen already from simply scaling (more data and more parameters). Many AI researchers are skeptical, but on the other hand the progress already seen has been pretty shocking. Most AI researchers think at a minimum it will have to be a combination of LLM+search; LLM+symbolic reasoning; LLM+planner; or more likely more complex designs etc., and plenty believe that additional breakthroughs are needed.