De Kraker: "If OpenAI is right on the verge of AGI, why do prominent people keep leaving?"

> Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

Same. I don't even understand what the underpinnings of AGI are supposed to look like.

> Because money?

The point in the article is that these people have a large stake in OpenAI and would benefit massively if OpenAI cracks AGI.

OpenAI and others have had a long pattern of raising funds and then those folks not in the immediate founders circle of big money ... move on to other companies raising funds where they can get in on that money and work on their own projects.

With all the money going into AI companies it makes sense that employment is very fluid regardless of breakthroughs or not.

> Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

Here's a really interesting discussion of exactly that subject:

> They're leaving because (and this is a vague recollection of some articles I read online, so I might be incorrect here) Altman seems to have unrealistic goals. Could also be that those heading out don't want to be tied to any copyright suits. Or as the 2nd commenter said, money.

I doubt they're leaving because of copyright infringement; if that were the case, they wouldn't be moving to Anthropic.

Or, they only went there to learn enough about how OpenAI is doing their magic and want to go chase those sweet VC dollars with their own startup.

The moves have led some to wonder just how close OpenAI is to a long-rumored breakthrough of some kind of reasoning artificial intelligence

> Same. I don't even understand what the underpinnings of AGI are supposed to look like.

I think that what you are missing is that no matter how true that is, it won't open the pockets of investors ;-)

I work with and get LLMs to some extent, but more LLM is still just more math, vectors ... output. More LLM is just more LLM, not necessarily anything magically different or with any fewer fundamental shortcomings.

Schulman’s parting remarks quoted in the last paragraph of the article said:

> Despite the departures, Schulman expressed optimism about OpenAI's future in his farewell note on X. "I am confident that OpenAI and the teams I was part of will continue to thrive without me," he wrote. "I'm incredibly grateful for the opportunity to participate in such an important part of history and I'm proud of what we've achieved together. I'll still be rooting for you all, even while working elsewhere."

(Emphasis mine)

> Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

A lot of computer science and cognitive science academics also say "it's not".

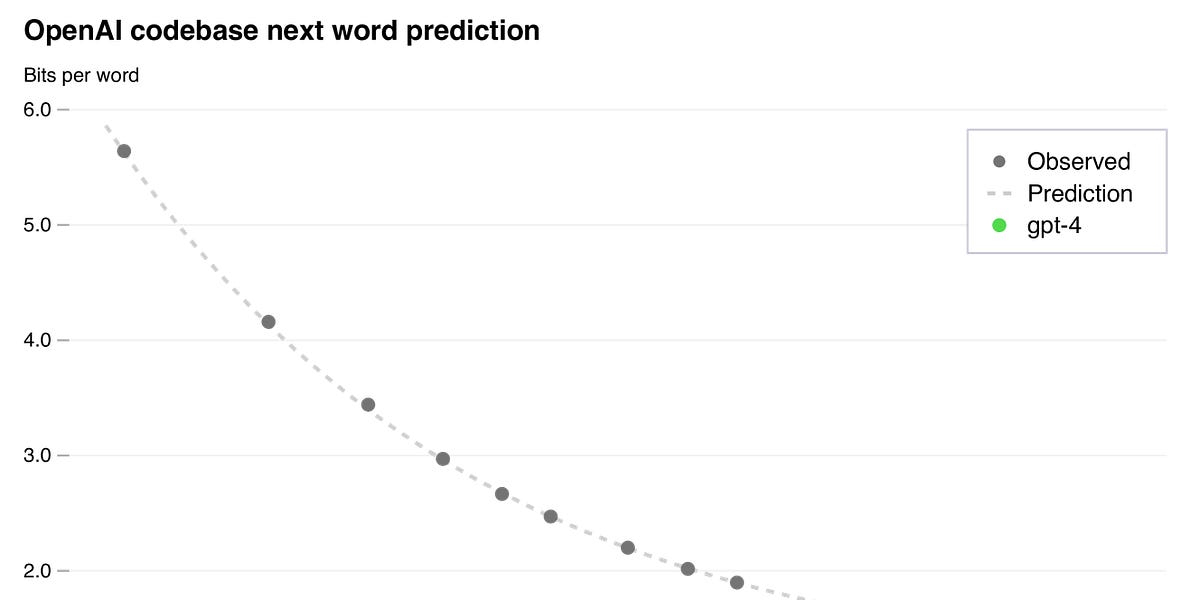

Microsoft CTO Kevin Scott has countered these claims, saying that LLM "scaling laws" (that suggest LLMs increase in capability proportionate to more compute power thrown at them) will continue to deliver improvements over time

ChatGPT-3.5 = 175 billion parameters, according to public information

Different studies have slightly varying numbers for a human brain, but it's on the order of 1000x more: from 0.1 to 0.15 quadrillion synaptic connections. Source: https://www.scientificamerican.com/article/100-trillion-connections/ (among others)

While it's likely to require something more than just scaling up the model size, I thought this gives some clue about scale. I agree with you, perhaps the answer is "it's not" scaling.
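As a rough sanity check on that scale comparison, here is a minimal Python sketch that uses only the figures quoted above. The variable and function names are my own, and treating one synapse as comparable to one model parameter is an assumption made for the sake of the comparison, not an established equivalence.

```python
# Figures as quoted in the comment above; taken as given, not independently verified.
LLM_PARAMETERS = 175e9          # 175 billion parameters
BRAIN_SYNAPSES_LOW = 0.10e15    # 0.1 quadrillion synaptic connections
BRAIN_SYNAPSES_HIGH = 0.15e15   # 0.15 quadrillion synaptic connections


def scale_ratio(synapses: float, parameters: float) -> float:
    """Return how many times larger the synapse count is than the parameter count."""
    return synapses / parameters


low = scale_ratio(BRAIN_SYNAPSES_LOW, LLM_PARAMETERS)
high = scale_ratio(BRAIN_SYNAPSES_HIGH, LLM_PARAMETERS)
print(f"Brain synapses vs. model parameters: {low:.0f}x to {high:.0f}x")
```

With these inputs the ratio comes out at roughly 570x to 860x, so "1000x" is the right order of magnitude rather than an exact figure.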

> Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

Basically it is induction based on the emergent behaviour and test performance already seen from simply scaling (more data and more parameters). Many AI researchers are skeptical, but on the other hand the progress already seen has been pretty shocking. Most AI researchers think that at a minimum it will have to be a combination such as LLM+search, LLM+symbolic reasoning, or LLM+planner, or, more likely, more complex designs, and plenty believe that additional breakthroughs are needed.

> Having followed AI off-and-on since the Prolog/Society of Mind days, I've never understood how a scaled up LLM is supposed to make the leap to AGI. Now, I'm not an AI researcher or scientist, but perhaps the answer is "it's not".

That is the thing that bothers me the most. Sure, these are "multimodal", but we are still fundamentally iterating on LLMs here. At best we can do some tricks here or there where it can do other things, but that doesn't make it an AGI.

> If OpenAI is nowhere near AGI, then it seems all the "safety" concerns are a bit unfounded. [...]

The real "safety" concerns are mostly about totally fucking up our society with fake generated content on social media and elsewhere. Which is quite bad enough for people to have some real concern over the fucking sociopathic techbros making it. Remember "Radio Rwanda"? A fucking genocide was basically incited by a fucking radio station.

> Meanwhile the rest of us over here in reality have long since known the truth: OpenAI and everyone else are blowing steam when they say they're "Close to AGI".

Yeah but we'll definitely have Full Self Driving next year.