Geekbench ML becomes “Geekbench AI,” a cross-platform performance test for NPUs and more

- Thread starter JournalBot

BlandMushroom

Wise, Aged Ars Veteran

Genuine question. It appears you can run AI workloads on the GPU with NVIDIA's CUDA, on the CPU using AVX-512 instructions, and on dedicated processing units (NPU inside SoC, tensor accelerator, etc.). How can you compare the performance across all these implementations?

> Genuine question. It appears you can run AI workloads on the GPU with NVIDIA's CUDA, on the CPU using AVX-512 instructions, and on dedicated processing units (NPU inside SoC, tensor accelerator, etc.). How can you compare the performance across all these implementations?

Something tells me there might be an extremely recent article on Ars that goes into this.

So now we have a way to benchmark how quickly a device can spew out useless garbage via some kind of AI component or application. Fantastic /s

ChefJeff789

Ars Scholae Palatinae

Man, I love metrics that have little relevance to the real world!

> What is the actual use case for an NPU at this point?

Image processing and object identification, for one. If you have a forward-facing camera in your car, you likely have an NPU in its main computer.

If you're asking what's the use case for an NPU in a desktop PC, besides video and audio processing, there's also running language and inference models locally, rather than piping everything up to the cloud.

Bad Monkey!

Ars Legatus Legionis

> What is the actual use case for an NPU at this point?

Video noise reduction, audio noise reduction, image background replacement (for video conferencing, etc), video enhancements (adding bokeh to webcam feed, etc), face tracking for video conferencing, image enhancement routines for photo editing software ("one-click photo adjustment")

Five years from now:

"Yeah, we did the benchmark for a while, but then we trained our local ML system on it, and let it optimize and update it thereafter. Strangely enough, although the benchmark gets updated regularly, it seems that most NPUs have hit a wall, performance-wise. For the last little while, all new processors have been almost, but not quite, capable of more than simple generative replies. And this despite companies telling us that models run on these new chips should be capable of so much more in terms of, well... reasoning, for lack of a better word.

I wonder what that means?"

> What is the actual use case for an NPU at this point?

The intent is to offload tasks that would either potentially make the system less responsive (if they ran on the CPU) or use more power (in the case of a discrete GPU). On Windows, that means things like Windows Studio Effects for cameras, transcription, translation, voice isolation, etc. Whether it actually ends up working the way AI enthusiasts think it will is anybody's guess, but the idea is to have dedicated hardware that doesn't use a ton of power but can do a lot of the math that is required for AI to work.

The one thing more expensive than real estate on a microchip is unused real estate on a microchip. I fear this will make for higher prices to enable doing things that purchasers have no strong interest in doing. Start writing 2026's articles now: "After spending $HORRIBLE_NUMBER to add NPU capabilities to their product lines, only to be met with consumer indifference, $COMPANY faces a hostile takeover attempt from Worms 'N' Bucks Hedge Fund and Bait Shop."

> The one thing more expensive than real estate on a microchip is unused real estate on a microchip. I fear this will make for higher prices to enable doing things that purchasers have no strong interest in doing. Start writing 2026's articles now: "After spending $HORRIBLE_NUMBER to add NPU capabilities to their product lines, only to be met with consumer indifference, $COMPANY faces a hostile takeover attempt from Worms 'N' Bucks Hedge Fund and Bait Shop."

How much real estate do you think these things take?

Looking at some annotated die photos of e.g. an Apple M3 Pro, the NPU takes up about 1.75% of the die.

That seems like a small price to pay in terms of die real estate for something that might pay off in a big way.

And for Apple, there is no "might pay off." They've been making effective use of their NPUs for years now. Face recognition, image processing, etc. etc.

> Genuine question. It appears you can run AI workloads on the GPU with NVIDIA's CUDA, on the CPU using AVX-512 instructions, and on dedicated processing units (NPU inside SoC, tensor accelerator, etc.). How can you compare the performance across all these implementations?

It doesn't sound that hard to me. If you want to measure how fast each of these compute primes, you measure the number of primes computed in a given timebox. If you want to measure how much AI they can crunch, you measure the number of weighted matrix operations they can do in a timebox.
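
To make the timebox idea concrete, here is a minimal sketch in pure Python. It counts how many small matrix multiplications finish within a fixed time budget. The function names (`matmul`, `matmuls_in_timebox`) and the matrix size are illustrative assumptions, not anything Geekbench AI is known to do, and a raw operation count like this ignores memory footprint, precision, and accuracy.

```python
import time


def matmul(a, b):
    """Multiply two matrices given as lists of rows (pure Python, no NumPy)."""
    cols_b = list(zip(*b))  # transpose b once so each column is a tuple
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def matmuls_in_timebox(size, seconds):
    """Count how many size x size multiplications complete within the budget."""
    a = [[float(i + j) for j in range(size)] for i in range(size)]
    b = [[float(i - j) for j in range(size)] for i in range(size)]
    deadline = time.perf_counter() + seconds
    count = 0
    while time.perf_counter() < deadline:
        matmul(a, b)
        count += 1
    return count


if __name__ == "__main__":
    n = matmuls_in_timebox(size=32, seconds=0.5)
    print(f"{n} multiplications of 32x32 matrices in 0.5 s")
```

The count is only comparable between devices if every device runs the same operation at the same precision, which is the hard part of the original question.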

> Genuine question. It appears you can run AI workloads on the GPU with NVIDIA's CUDA, on the CPU using AVX-512 instructions, and on dedicated processing units (NPU inside SoC, tensor accelerator, etc.). How can you compare the performance across all these implementations?

By looking at the resulting Geekbench scores, presumably.

I just downloaded the Mac version. Before running the benchmark, you're given the option of running it on the CPU, GPU, or Neural Engine.

It's all very straightforward.

How many years did in-CPU video "stuff" exist before software actually used it? A decade? (Sure, there was software that used it, but by and large it was ignored due to extreme limitations.)

I am guessing this will be slightly like that.

I’ve seen tests of various smartphones doing ML tasks and the results are all over the place. Is this something that can even be accurately measured? What about the models companies like Apple or Google run on their devices which may be optimized specifically for their NPU?

Or am I missing something?

makar_devushkin

Smack-Fu Master, in training

> Video noise reduction, audio noise reduction, image background replacement (for video conferencing, etc), video enhancements (adding bokeh to webcam feed, etc), face tracking for video conferencing, image enhancement routines for photo editing software ("one-click photo adjustment")

Why do you need an NPU for this? There have always been dedicated image, audio and video processing chips embedded for accomplishing these enhancements.

> Why do you need an NPU for this? There have always been dedicated image, audio and video processing chips embedded for accomplishing these enhancements.

Using DNNs for these tasks provides much better results, and requires only one blob of dedicated silicon...

makar_devushkin

Smack-Fu Master, in training

> Using DNNs for these tasks provides much better results, and requires only one blob of dedicated silicon...

I agree with requiring less silicon space. However, I am skeptical that it provides better results. A dedicated chip is always capable of outperforming a chip with a mishmash of functionalities.

It's analogous to an iPhone camera and a dedicated camera. The iPhone camera can be better than most cameras but will never beat the high-end dedicated cameras. It's the same for the Digital Signal Processors.

Perhaps the image generation capabilities and less silicon space are the only true standout features of an NPU.

> What is the actual use case for an NPU at this point?

There are a TON of potential uses, but the software isn’t there yet. Photo editing, filtering, etc. Spell checking. Also, AI upscaling. Sound file cleanup, I could go on. Oh, even virus scanning. Windows Defender uses the GPU when it can, but it can absolutely use the NPU instead.

EDIT: most of the actual useful AI stuff can be implemented with a combination of the NPU, CPU, and GPU. THAT is where the real AI innovation is, IMO. The trouble is, most of that stuff Microsoft and friends can’t charge a subscription for, so the hype is elsewhere.

> I agree with requiring less silicon space. However, I am skeptical that it provides better results. A dedicated chip is always capable of outperforming a chip with a mishmash of functionalities.
>
> It's analogous to an iPhone camera and a dedicated camera. The iPhone camera can be better than most cameras but will never beat the high-end dedicated cameras. It's the same for the Digital Signal Processors.
>
> Perhaps the image generation capabilities and less silicon space are the only true standout features of an NPU.

That's an intuitive but wrong understanding of the situation.

DNNs have been shown in countless research papers to provide, for example, better noise reduction for image processing than algorithms that humans have come up with and implemented in silicon.

One NPU can eliminate most of the image processing pipeline that was previously done using hand-made algorithms implemented in dedicated silicon, and it provides better results to boot. Apple has been taking advantage of this for years now.

The fact that you're talking about image generation capabilities means that you're misunderstanding what's going on. Generative AI is only a small subset of the possible uses for NPUs.

> I’ve seen tests of various smartphones doing ML tasks and the results are all over the place. Is this something that can even be accurately measured? What about the models companies like Apple or Google run on their devices which may be optimized specifically for their NPU?
>
> Or am I missing something?

I don’t know about Android, but Apple’s implementation even prior to iOS 18 was very good. What specifically did you think was all over the place?

> Why do you need an NPU for this? There have always been dedicated image, audio and video processing chips embedded for accomplishing these enhancements.

Because a generalized thing that can do multiple tasks well is better in a myriad of ways than lots of individual specialized things that can only do a single task well? If I can replace all those chips with a single component that does as well or better than any of them, I've saved cost, energy use, space....

TheThirdDictor

Ars Tribunus Militum

This will be very interesting to play with. I use GPT4All on several devices, and it is remarkable to see the differences in TPS (tokens per second) results. For instance, on an Intel Core i7-13700HX, which is a pretty powerful chip, with an RTX 3060 and 32 GB RAM, running Llama 3.1 8B on the GPU, I get 5 TPS.

When running on a MacBook Pro M2, running the same model also with 32 GB RAM, I get 33 TPS, or over 6x the performance.

The i7 benches slightly higher in most "normal" benchmarks, and I don't think the Mac is using the NPU.

But when using Phi 3 Mini, I get 30 TPS on the i7, and about 60 TPS on the M2, for a 2x ratio.

So the chip architectural differences are really quite remarkable for this type of workload.
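
The ratios quoted above can be checked with a few lines of arithmetic. The sketch below only restates the figures from this post; the dictionary layout and the `speedup` helper are illustrative names, not part of any benchmark tool.

```python
# Tokens-per-second figures as reported in the post above.
reported_tps = {
    "Llama 3.1 8B": {"i7-13700HX + RTX 3060": 5, "MacBook Pro M2": 33},
    "Phi 3 Mini": {"i7-13700HX + RTX 3060": 30, "MacBook Pro M2": 60},
}


def speedup(fast_tps, slow_tps):
    """Return how many times faster the first figure is than the second."""
    return fast_tps / slow_tps


for model, tps in reported_tps.items():
    ratio = speedup(tps["MacBook Pro M2"], tps["i7-13700HX + RTX 3060"])
    print(f"{model}: {ratio:.1f}x")
```

This prints 6.6x for Llama 3.1 8B and 2.0x for Phi 3 Mini, so the gap between the two machines depends heavily on the model being run.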

OrangeCream

Ars Legatus Legionis

> What is the actual use case for an NPU at this point?

Face recognition, object recognition, scene recognition, fingerprint recognition, text recognition, image enhancement, object selection, voice recognition, transcription, translation, song recognition, and various transformations.

Apps using said features: FaceTime, Face ID, Siri, Voicemail, Photos, Camera, Email, and other iPhone and Mac apps use it extensively.

> Genuine question. It appears you can run AI workloads on the GPU with NVIDIA's CUDA, on the CPU using AVX-512 instructions, and on dedicated processing units (NPU inside SoC, tensor accelerator, etc.). How can you compare the performance across all these implementations?

(a) Are you training or inferring?

(b) Are you running at 32b, 16b, or <=8b?

(c) Do you care about energy usage?

NPU optimizes for the case of low energy inference (generally at 16b or less).

If your use case does not match all those criteria then it's not relevant to you.

(Apple provides for some simple training, think things like body movements during exercise, on their NPU, but most NPUs don't even go that far.)

OrangeCream

Ars Legatus Legionis

> There are a TON of potential uses, but the software isn’t there yet. Photo editing, filtering, etc. Spell checking. Also, AI upscaling. Sound file cleanup, I could go on. Oh, even virus scanning.

Sounds like almost everything Apple already uses an NPU for today.

> Man, I love metrics that have little relevance to the real world!

Justify that statement.

Is this yet another pathetic "I'm too hip to

> It doesn't sound that hard to me. If you want to measure how fast each of these compute primes, you measure the number of primes computed in a given timebox. If you want to measure how much AI they can crunch, you measure the number of weighted matrix operations they can do in a timebox.

That's an exceptionally dumb statement.

Most of the recent activity in real-world AI is in some combination of:

- reducing the size of models in MEMORY because that's a more important constraint than the number of matmuls

- changing the models so that fewer matmuls are required

- using layers that are binarized or trinarized so that matmuls are replaced with variants of binary logic (things like count the net number of 1 vs -1 weights)

Yes, for training almost everything is about matmuls (IF you have enough memory and bandwidth). But not on the inference side.
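
As an illustration of the last bullet, here is a minimal sketch of how a dot product collapses into additions or bit counting once the weights are restricted to -1, 0, and +1. The function names are hypothetical, and the bit-packing convention (bit 1 means +1, bit 0 means -1) is an assumption chosen for the example; real inference engines use their own layouts.

```python
def ternary_dot(weights, activations):
    """Dot product where every weight is -1, 0, or +1: only adds and subtracts."""
    total = 0
    for w, x in zip(weights, activations):
        if w == 1:
            total += x
        elif w == -1:
            total -= x
        elif w != 0:
            raise ValueError("weights must be -1, 0, or +1")
    return total


def binary_dot(weight_bits, activation_bits, n):
    """Dot product of two {-1, +1} vectors packed into n-bit integers.

    Matching bits contribute +1 and differing bits contribute -1, so the
    result equals n - 2 * popcount(weights XOR activations).
    """
    mask = (1 << n) - 1
    differing = bin((weight_bits ^ activation_bits) & mask).count("1")
    return n - 2 * differing


print(ternary_dot([1, -1, 0, 1], [4, 1, 9, 2]))  # 4 - 1 + 2 = 5
print(binary_dot(0b1011, 0b1101, 4))             # two bits differ: 4 - 4 = 0
```

Neither function performs a multiplication, which is why a benchmark that only counts matmuls says little about this kind of layer.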

> I’ve seen tests of various smartphones doing ML tasks and the results are all over the place. Is this something that can even be accurately measured? What about the models companies like Apple or Google run on their devices which may be optimized specifically for their NPU?
>
> Or am I missing something?

You are correct that this (boldened) is a HUGE problem with all these benchmarks.

You are getting to see something of the overall "system performance" of the package of conversion tools+model compiler+hardware as provided by Apple, or MS, or Android. Which is kinda interesting, and presumably useful if you're a developer shipping a model you developed yourself.

But for MOST users it's utterly irrelevant. What matters to Apple users is how well Apple's Foundation Model for Language (and the equivalent for Vision) work on Apple hardware, not how well some ported (and un- or barely-optimized) 3rd party code operates. Likewise for MS or Android.

And no benchmarks are testing this...

OrangeCream

Ars Legatus Legionis

> I agree with requiring less silicon space. However, I am skeptical that it provides better results. A dedicated chip is always capable of outperforming a chip with a mishmash of functionalities.
>
> It's analogous to an iPhone camera and a dedicated camera. The iPhone camera can be better than most cameras but will never beat the high-end dedicated cameras. It's the same for the Digital Signal Processors.
>
> Perhaps the image generation capabilities and less silicon space are the only true standout features of an NPU.

That’s a terrible example because there’s no real difference between a dedicated camera and an iPhone camera except size. You’re making the analogy that a four-door car is better than a motorcycle at being a four-door car.

As for providing better results? The point isn’t fundamentally to provide better results, per se. It’s to provide the same results with fewer resources, including developer, QA, and HW resources.

Take the camera example. Olympus has to take thousands of photos of a specific type, say sunsets, and then analyze them, create a software pipeline, write the detectors and the enhancement software, and then test, tune, rewrite, retest, retune, and rework multiple times until it has a pipeline that creates fantastic sunsets. The user still has to select the sunset dial to apply the sunset filter, however.

ML has a different workflow. Apple can license millions of professionally taken sunset photos and then degrade them to create a training set. It can then programmatically train a network to take a degraded image and recreate the original image. That can be done with far fewer resources. They can also use the same data to create a sunset detector, and therefore apply the correct enhancements when the user selects ‘autoenhance’.
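
A minimal sketch of the "degrade, then learn to restore" data preparation described above. Everything here is an assumption made for illustration: images are flat lists of pixel values in [0, 1], the degradation is plain additive Gaussian noise, and the function names are hypothetical. A real pipeline would more likely combine blur, sensor noise, compression artifacts, and downscaling.

```python
import random


def degrade(pixels, noise=0.1, rng=None):
    """Return a noisy copy of an image, clamped back into the range [0, 1]."""
    rng = rng or random.Random()
    return [min(1.0, max(0.0, p + rng.gauss(0.0, noise))) for p in pixels]


def make_training_pairs(originals, noise=0.1, seed=0):
    """Build (degraded_input, clean_target) pairs for a restoration network."""
    rng = random.Random(seed)
    return [(degrade(image, noise, rng), image) for image in originals]


clean_images = [[0.2, 0.5, 0.9], [0.0, 1.0, 0.3]]
pairs = make_training_pairs(clean_images)
print(len(pairs), "training pairs")
```

The clean photo serves as the training target and the degraded copy as the input, so no hand labeling is needed. That is what makes the workflow reusable for a new subject once licensed photos are available.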

The end result is fundamentally the same, an ISP that produces excellent sunset photos.

The difference is that Apple can reuse their workflow to create an enhancement for beach pictures, picnic pictures, city landscapes, mountain vistas, dog pictures, cat pictures, etc. Any subject where they can get readily licensable photos in bulk, they can create a network to detect and enhance pictures. All programmatically and all automatically.

OrangeCream

Ars Legatus Legionis

> Why do you need an NPU for this? There have always been dedicated image, audio and video processing chips embedded for accomplishing these enhancements.

Developing an ISP is harder than developing an ML model.

ML models are also likely to run more efficiently and use less power than a dedicated ISP if the model was optimized to do so.

> This will be very interesting to play with. I use GPT4All on several devices, and it is remarkable to see the differences in TPS (tokens per second) results. For instance, on an Intel Core i7-13700HX, which is a pretty powerful chip, with an RTX 3060 and 32 GB RAM, running Llama 3.1 8B on the GPU, I get 5 TPS.
>
> When running on a MacBook Pro M2, running the same model also with 32 GB RAM, I get 33 TPS, or over 6x the performance.
>
> The i7 benches slightly higher in most "normal" benchmarks, and I don't think the Mac is using the NPU.
>
> But when using Phi 3 Mini, I get 30 TPS on the i7, and about 60 TPS on the M2, for a 2x ratio.
>
> So the chip architectural differences are really quite remarkable for this type of workload.

For the particular case you describe, the model is probably running on the CPU or GPU. You can use a tool like powermetrics or asitop on Apple Silicon to see what hardware is being used.

If it's the CPU, the big Apple win vs Intel is the presence of Apple AMX which has many features to help neural execution on a CPU.

(Presumably at some point Intel will ship Intel AMX which should supposedly have the same effect. Except Intel being Intel they will fsck it up: limit AMX to Xeon, or create some version that works differently between P and E-cores; so bottom line is it won't actually help you...)

If it's GPU, well, what can I say? You can read my volume 6 to understand how Apple GPU works,

https://github.com/name99-org/AArch64-Explore

but every time I look at the Intel GPU it makes my brain hurt, so while I have lots of comparisons of how Apple does things vs nV (and a few vs AMD) I have no idea what the strengths and weaknesses of the Intel GPU are.

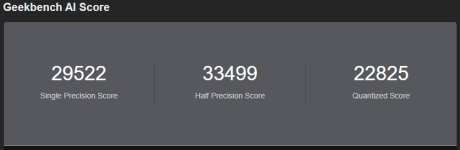

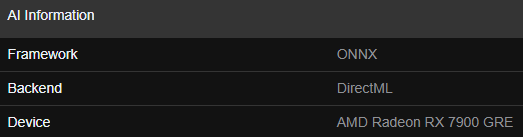

As an additional data point using Win11, ONNX and DirectML:Radeon RX 7900 GRE

View attachment 88006

View attachment 88007

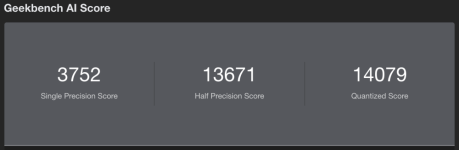

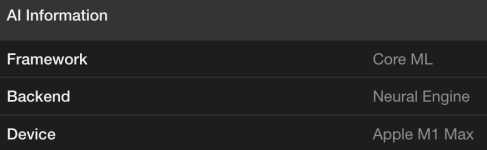

16" MacBook Pro M1 Max

View attachment 88008

View attachment 88009

RTX3070 (slight OC, in case that makes any difference for this workload)

Single: 18957

Half: 30705

Quantized: 11761

> Radeon RX 7900 GRE
>
> View attachment 88006
>
> View attachment 88007
>
> 16" MacBook Pro M1 Max
>
> View attachment 88008
>
> View attachment 88009

Numbers without giving the OS are meaningless.

Certainly for Apple there's a substantial jump in iOS18.1b (and macOS equiv) vs 18.0b and 17.

There will PROBABLY be a further jump to the Apple quantized results soon.

The CoreML tools required to create W8A8 models were only released a few days ago, and I strongly suspect that the Geekbench AI models were NOT created using those tools. Which is why the Apple quantized scores are currently so close to the FP16 scores: they are saving some memory traffic, but all compute is W16A16, so you currently only see a few percent speed boost.
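
To illustrate why 8-bit weights save memory traffic even when the compute stays at 16 bits, here is a minimal sketch of symmetric per-tensor int8 quantization. It is a generic textbook scheme with hypothetical function names, not a description of what Core ML, OpenVINO, ONNX, or Geekbench AI actually do.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats, as a 16-bit compute path would before math."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.4, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)          # one byte per weight instead of two for FP16
print(max_error)  # bounded by about half of one quantization step
```

Storing `q` halves the bytes fetched per weight compared with FP16, but if the values are expanded back to 16 bits before the arithmetic, the compute cost is unchanged. That matches the small speed boost described above. The rounding error in `restored` is also one of the sources of the accuracy differences between frameworks.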

Also important to note is that for APPLE the quantized (8b) accuracy scores are generally high 90s, lowest I have seen is 93%.

For OpenVINO (Intel) the 8b score is generally mid 90s, lowest is about 80%.

For ONNX (MS) and TensorFlow the 8b scores are atrocious, sometimes as low as 40% or 60%.

Bottom line is you can run an Apple device (at least w/ iOS 18.1 or later) at quantized 8b and the performance results are meaningful. IF these accuracy rates persist when using W8A8 models. Which is possible, when I see how Apple are doing the W8A8, but is not guaranteed.

For Intel, well, ...

And for anyone else, the 8b results are worthless. MS and Google COULD probably fix their software the way Apple has done, to make 8b more accurate, but that will take time and willpower.

johnsonwax

Ars Legatus Legionis

> What is the actual use case for an NPU at this point?

All of Apple's computational photography including FaceID is done in the NPU. It's why they added them 6 years ago. It's why iPhone has double the NPU compute of the corresponding M series processor.

> Image processing and object identification, for one. If you have a forward-facing camera in your car, you likely have an NPU in its main computer.
>
> If you're asking what's the use case for an NPU in a desktop PC, besides video and audio processing, there's also running language and inference models locally, rather than piping everything up to the cloud.

Yes, I was asking about PCs. Embedded use cases are pretty straightforward especially in environments like you note where msec count. I am not as impressed by edge computing on a PC - the cloud is "pretty close" at that point and I don't think the savings would be anything much to talk about. That is, content creators are probably going to want the all-up versions you get in the cloud anyway, aside from some fairly nice applications -- I think. If I'm wrong I'd like to know what the applications really are at this point.

Edubfromktown

Ars Centurion

Oh joy!

Another benchmark to get our panties in a bunch over

I actually like: lies, damn lies and benchmarks (purely for entertainment purposes).

M1 Studio Base Max: 3046

(used and almost affordable lol) M3 Pro MBP 14": 4137

OrangeCream

Ars Legatus Legionis

> Yes, I was asking about PCs. Embedded use cases are pretty straightforward especially in environments like you note where msec count. I am not as impressed by edge computing on a PC - the cloud is "pretty close" at that point and I don't think the savings would be anything much to talk about. That is, content creators are probably going to want the all-up versions you get in the cloud anyway, aside from some fairly nice applications -- I think. If I'm wrong I'd like to know what the applications really are at this point.

It’s a chicken and egg thing here. If NPUs don’t exist then SW using NPUs won’t be written.

So the only real use cases are borrowed from the iOS ecosystem, which has been using NPUs for 6 years now.

FaceID is an example. I have a Windows Hello laptop and the face recognition takes several seconds, which is 10x slower than on my iPhone.

Unlocking the machine, unlocking my password manager, authenticating my VPN, and using it for other 2FA purposes is excruciatingly slow in comparison, so an NPU to accelerate all those use cases would be meaningful, especially since you can’t rely on the cloud if you’re trying to do 2FA.

Video chat filters would also benefit from an NPU, if only because it would be more powerful than relying on the CPU. Arguably a GPU works in a pinch but requires a more powerful GPU than most systems have.

Those are the easy wins. Local chat transcripts from Teams audio and video, automatic meeting minutes, searching text in photos, searching photos by subject, organizing documents by subject, and improved image enhancement tools are also good NPU-accelerated features.
